As an ecommerce website owner, you want to ensure that your site is easily discoverable by search engines and potential customers.

In this blog post, we'll explore the importance of the robots.txt file and how it can affect your ecommerce website's visibility on search engines. We'll also provide tips on how to properly set up and maintain your robots.txt file for optimal results. So whether you're new to ecommerce or looking to improve your website's SEO, this article has something for you.

What is a Robots.txt File?

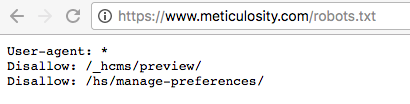

A robots.txt file is a plain-text file at the root of your site that tells search engine crawlers which pages or files they should not crawl. It matters for ecommerce sites because it keeps crawlers away from duplicate and low-value URLs, so they spend their time on the pages you want found. It can also keep crawlers out of areas such as login pages, although it is a set of instructions for well-behaved bots rather than a security control. Writing a robots.txt file that doesn't block essential pages takes careful consideration.
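To make this concrete, here is a minimal sketch of what a small robots.txt might contain and how a rule-following crawler would read it. It uses Python's standard `urllib.robotparser` module. The domain `shop.example.com` and the paths are assumptions made up for illustration, not taken from any real store.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example store (domain and paths are assumptions).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /account/
Sitemap: https://shop.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, user_agent: str = "*") -> bool:
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(is_crawlable("https://shop.example.com/products/blue-shirt"))
print(is_crawlable("https://shop.example.com/cart/"))
```

The product URL is reported as crawlable and the cart URL is not, because `Disallow` rules match by path prefix.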

To Crawl or Not to Crawl? That is the Question

Without a robots.txt file in place, your ecommerce website is completely open and crawlable, which sounds like a good thing. But when crawlers spend their time on irrelevant and outdated content, it may come at the expense of crawling and indexing important and valuable pages. You might even have some key pages that get skipped entirely.

If left unchecked, a search engine robot may crawl your shopping cart links, wishlist links, admin login pages, your development site or test links, or other content that you might not want showing up in the search results. Crawls can find personal information, temporary files, admin pages, and other pages that contain information that you may not have realized was publicly accessible.
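As a rough sketch, a list of paths like these can be turned into a robots.txt file with a few lines of Python. The helper name `build_robots_txt` and the paths in `BLOCKED_PATHS` are hypothetical; the real paths depend on your ecommerce platform.

```python
from typing import Iterable, Optional


def build_robots_txt(disallowed_paths: Iterable[str],
                     sitemap_url: Optional[str] = None) -> str:
    """Build a robots.txt body that blocks the given paths for all crawlers."""
    lines = ["User-agent: *"]
    lines.extend(f"Disallow: {path}" for path in disallowed_paths)
    if sitemap_url:
        # A blank line separates the rule group from the Sitemap line.
        lines.extend(["", f"Sitemap: {sitemap_url}"])
    return "\n".join(lines) + "\n"


# Hypothetical paths; substitute the ones your platform actually uses.
BLOCKED_PATHS = ["/cart/", "/wishlist/", "/admin/", "/dev/"]

print(build_robots_txt(BLOCKED_PATHS, "https://shop.example.com/sitemap.xml"))
```

Keep in mind that robots.txt is publicly readable, so listing a path there tells everyone that it exists. Anything truly private should also sit behind a login.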

It is important to remember that each website has a “crawl budget”, a limit on how many pages a search engine will crawl in a given period. You want to make sure that your most important pages are getting crawled and indexed, and that you’re not wasting your crawl budget on temporary files.

Why Is Robots.txt Important for Ecommerce Websites?

As the owner of an ecommerce website, you need search engines to find and index the right parts of your site. This is where robots.txt comes in: it tells search engine crawlers which pages or files on your website they should or should not crawl.

It's a vital tool for managing crawler access to your website and steering search engines toward your most important content.

1. Privacy and Security

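Ecommerce websites usually handle sensitive customer data, such as personal and financial information. Robots.txt can ask search engine bots not to crawl directories that lead to this kind of information, such as account and checkout areas, which helps keep those pages out of search results. It is not a lock, though: the file is publicly readable and only well-behaved bots follow it, so sensitive areas still need proper authentication. Used together, the two protect your customers' privacy and support their trust in your platform.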

2. Preventing Duplicate Content

Duplicate content can hurt your website's search engine rankings, because several near-identical URLs end up competing with each other. With their numerous product pages, filters, and sort options, ecommerce sites are especially prone to it. A well-written robots.txt file can instruct search engine bots to skip specific URL parameters or dynamically generated pages, reducing the risk of duplicate content issues.
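Parameter rules usually rely on two special characters that major search engines support in robots.txt paths: `*` matches any sequence of characters, and a trailing `$` marks the end of the URL. Below is a small sketch of that matching logic so you can see which URLs a pattern would catch. The function name `rule_matches` and the example patterns are our own assumptions, and this is a simplified illustration rather than any search engine's actual implementation. As far as we know, Python's built-in `urllib.robotparser` only does plain prefix matching, which is why the sketch uses a regular expression instead.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a URL path against a robots.txt pattern with '*' and a trailing '$'."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece and let '*' stand for "any characters".
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    # A trailing '$' means the whole path must match; otherwise a prefix match is enough.
    matcher = re.fullmatch if anchored else re.match
    return matcher(regex, path) is not None


# Hypothetical rules for sort and filter parameters.
print(rule_matches("/*?sort=", "/shoes?sort=price"))
print(rule_matches("/*?sort=", "/shoes"))
print(rule_matches("/*.pdf$", "/files/size-guide.pdf"))
```

Note that `/*?sort=` only catches URLs where `sort` is the first parameter. A URL such as `/shoes?color=red&sort=price` would need a second pattern like `/*&sort=`.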

3. Managing Crawl Budget

The crawl budget is the amount of resources that search engines allocate to crawling and indexing your webpages. Managing it well is crucial for large ecommerce sites with thousands of pages. By keeping bots away from low-value URLs, robots.txt helps search engines spend their limited resources on your most important pages, improving your site's exposure in search results.

4. SEO Optimization

Robots.txt can be used to control how search engines crawl your website. By preventing access to unnecessary or low-value pages, you can direct search engine attention to your most important pages, such as product listings, category pages, and valuable content. This can improve your site's overall SEO performance as well as your rankings for related keywords.

Common Mistakes to Avoid in Robots.txt for Ecommerce Websites

When creating or managing a Robots.txt file for your ecommerce website, it's crucial to avoid certain common mistakes that can inadvertently impact your site's visibility and SEO performance. Here are some key mistakes to avoid:

1. Blocking Important Pages

Unintentionally blocking crucial pages that you want search engines to crawl and index is one of the most serious mistakes. Check your robots.txt directives to make sure that important pages like your homepage, product pages, category pages, and other key content are not blocked. Remember that a "Disallow" rule matches every URL that starts with the given path, so a short rule can block far more than intended. If a blocked directory contains pages you do want crawled, check that a matching "Allow" directive is in place for them.
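One way to catch this mistake is to keep a list of must-crawl URLs and check them against your rules whenever the file changes. The sketch below uses Python's standard `urllib.robotparser`. The helper `find_blocked`, the sample rules, and the URLs are all hypothetical. The sample deliberately includes an overly broad rule, `Disallow: /p`, that also blocks everything under `/products/`.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs from urls that the given rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules: "/p" was perhaps meant for "/print/" but matches "/products/" too.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /p
"""

MUST_CRAWL = [
    "https://shop.example.com/",
    "https://shop.example.com/products/blue-shirt",
    "https://shop.example.com/category/shirts",
]

for url in find_blocked(ROBOTS_TXT, MUST_CRAWL):
    print("Blocked by mistake:", url)
```

An empty result means none of your key pages are blocked under these rules.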

2. Allowing Sensitive Content

The opposite mistake is just as costly. Your robots.txt file should include rules that keep crawlers away from sensitive material, such as customer data and administrative areas, and it should not contain rules that accidentally open those areas up again.

Check that the directories containing sensitive information are properly disallowed in your robots.txt file so that they stay out of search results, and protect them with authentication as well, since robots.txt alone does not stop unwanted access.

3. Ignoring Mobile Version

With the growing importance of mobile optimization, it's critical not to overlook your ecommerce website's mobile version. If you serve one, check that your robots.txt rules allow search engine bots to crawl and index it.

A robots.txt file only applies to the host it is served from. If your mobile site lives on a separate subdomain, it needs its own robots.txt file at the root of that subdomain. If it lives in a subdirectory, make sure no rule in your main file blocks that path.
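To see which robots.txt file governs a given page, you can derive it from the page's URL. This is a small sketch using Python's standard `urllib.parse`; the function name `robots_txt_url` and the `m.shop.example.com` subdomain are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt location that applies to page_url's host."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port), drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://shop.example.com/products/blue-shirt"))
print(robots_txt_url("https://m.shop.example.com/products/blue-shirt?ref=home"))
```

The two pages resolve to two different files, one per host, so each file has to be written and maintained separately.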

4. Incorrect Syntax

Robots.txt files have a specific syntax that must be followed for search engine bots to read the directives correctly. Syntax errors, such as missing slashes, misplaced directives, or incorrect use of wildcards, can have unintended consequences. Double-check the syntax of your robots.txt file before you publish it.
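A few of these errors can be caught automatically. The sketch below is a deliberately simple checker, not a full validator: it flags lines without a colon, misspelled directive names, rules that appear before any `User-agent` line, and paths that don't start with `/`. The function name `lint_robots_txt` and the set of accepted directives are our own assumptions. `Crawl-delay` is included because some crawlers honor it, even though it is not part of the core standard.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list:
    """Return (line_number, message) pairs for common robots.txt syntax mistakes."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


# Hypothetical file with three typical mistakes.
SAMPLE = """\
User-agent: *
Disallow: cart/
Dissalow: /admin/
Allow /products/
"""

for line_number, message in lint_robots_txt(SAMPLE):
    print(f"line {line_number}: {message}")
```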

5. Lack of Testing

Before putting your robots.txt file on your live ecommerce website, test it properly. Use a robots.txt testing tool to evaluate the file and catch errors or unintended effects. Once the file is live, Google Search Console shows how Google fetched and parsed it.

Testing helps you identify and resolve issues that could prevent search engine bots from crawling and indexing your content properly.

Learn More

While a robots.txt file can be useful to block content that you don’t want indexed, sometimes robots.txt files inadvertently block content that website owners do want crawled and indexed. If you're having trouble with some of your key pages not getting indexed, the robots.txt file is a good place to start looking for the cause. It's a good idea to monitor what's in your robots.txt file and keep it updated.

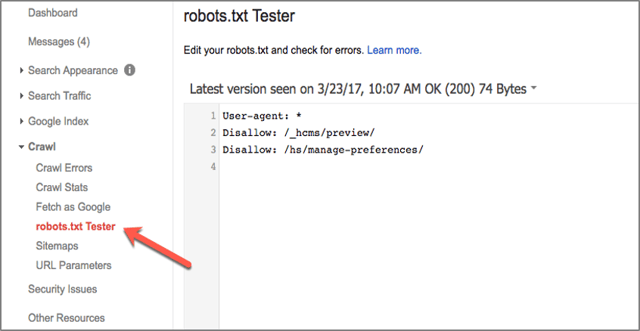

There are a number of SEO tools that you can use to see what a robots.txt file may be blocking, and one of the best is found, for free, in Google Search Console.

In Google Search Console, the robots.txt report shows how Google reads your file, and the URL Inspection tool tells you whether a specific page is blocked from crawling. Don't forget to leverage your XML Sitemap to list the pages you do want Google to crawl. If you're auto-generating your XML Sitemap, you might unintentionally be including pages that you're also disallowing in your robots.txt file. Keeping an eye on Google Search Console will alert you to errors like these in both your sitemap file and your robots.txt file.
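You can also check for sitemap and robots.txt conflicts yourself. The following sketch reads the URLs from a sitemap and reports any that the robots.txt rules disallow. It uses only Python's standard library. The helper name `sitemap_conflicts`, the sample rules, and the sample sitemap are hypothetical, and both files are passed in as strings to keep the example self-contained.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_conflicts(robots_txt: str, sitemap_xml: str,
                      user_agent: str = "Googlebot") -> list:
    """Return sitemap URLs that the robots.txt rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    urls = [loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical inputs: the sitemap lists a wishlist page that robots.txt blocks.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wishlist/
"""

SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://shop.example.com/products/blue-shirt</loc></url>
  <url><loc>https://shop.example.com/wishlist/shared/123</loc></url>
</urlset>
"""

for url in sitemap_conflicts(ROBOTS_TXT, SITEMAP_XML):
    print("Listed in sitemap but disallowed:", url)
```

Each URL it reports should either be removed from the sitemap or unblocked in robots.txt, depending on whether you want it in search results.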

A robots.txt file can be the best thing to happen to your ecommerce website, so if you're not utilizing its power, it's time to get started. If you need help with setting up your robots.txt file, contact our expert for personalized assistance.